Artificial intelligence (AI) technology is becoming ever-present, with content creators using it to save time on writing and production.

That sounds beneficial. However, AI has also led to an alarming surge of fake content and growing concerns about disinformation.

Generative AI has made fabricated reviews more sophisticated than ever and makes it easy to write fake blog and social media posts that read as if a real person wrote them.

This is possible because generative AI tools are machine learning models trained on large amounts of human-made content, so their output can be highly convincing.

However, this also means it has never been easier to publish fake news at scale, along with image and video deepfakes, and to reach millions of people across platforms. In this blog post, we'll explore what you need to know about AI and disinformation.

What is Disinformation?

Disinformation is false information intended to deliberately mislead people. Examples include:

- Phishing

- Hoaxes

- Propaganda

- Fabricated news stories

Misinformation vs Disinformation

Misinformation and disinformation are both considered fake or false news. However, there is an important difference between the two:

- Disinformation is a strategic, deliberate attempt to deceive audiences with false information.

- Misinformation also refers to false content, but it is not spread deliberately. The people sharing misinformation do not know that it is false.

Negative Effects of AI-Generated Content and Disinformation

According to a new study reported by MIT, disinformation generated by AI may be more convincing than disinformation written by humans.

AI content generators make content cheaper and faster to produce. Their output is also often indistinguishable from human-written content, and it can even be more convincing and engaging.

However, AI-generated content has several drawbacks that can contribute to false news and disinformation.

1. Lack of Human Touch

Artificial intelligence can produce fluent text, but it does not hold values the way people do, and it lacks emotional depth and empathy. A human-written article can offer context, creativity, originality, and critical thinking on a topic. Without a human touch, important nuances of tone and context can be missing from an article.

2. Biases

Most people wouldn't assume that AI has biases, since it isn't human and doesn't make human judgments. However, this couldn't be further from the truth, because AI learns from content that people have already created.

Humans also decide which content and data to train AI on, and those choices can introduce bias of their own.

For example, if an AI model is trained only on content with a particular political leaning, that perspective is all it will reflect.

Consciously or not, humans create content that is full of opinion and bias, and sometimes short on facts. AI learns this bias and reflects it back to us in the content it produces. Over time, as AI-generated content is published and becomes new training material, that bias can grow more extreme.

3. Further Promoting False News Online

Countless online articles already contain false information. Some site creators also pack their sites with ads, suggesting their goal is to earn revenue from algorithmically served advertising. Since AI learns from existing online content, anything published online can become the basis for future AI content. This creates a self-reinforcing feedback loop that spreads disinformation about a subject even further.

4. Overreliance

The human brain tends to take the easiest route to solve a problem. This makes AI incredibly tempting, since it promises to take away much of our workload. However, if we get too used to artificial intelligence doing all the work, we become too dependent on the technology.

Over time, we may stop questioning the content it creates for the sake of convenience. In the same way that we all depend on electricity in our daily lives, we may become similarly dependent on AI. We can use this tool to our benefit or to our disadvantage, but we must not lose our critical thinking abilities.

5. Deepfakes

Deepfakes are artificially generated images and videos, often of celebrities, politicians, and other public figures. They can be so realistic that it is difficult or impossible to tell whether they are real. As more people gain access to these AI tools, more and more deepfakes circulate across the internet and social media.

People are already using deepfakes to support disinformation campaigns by falsely depicting people saying or doing things that never happened. Eventually, the spread of disinformation through AI could threaten democracy and basic human rights.

How AI and Disinformation Spread False News

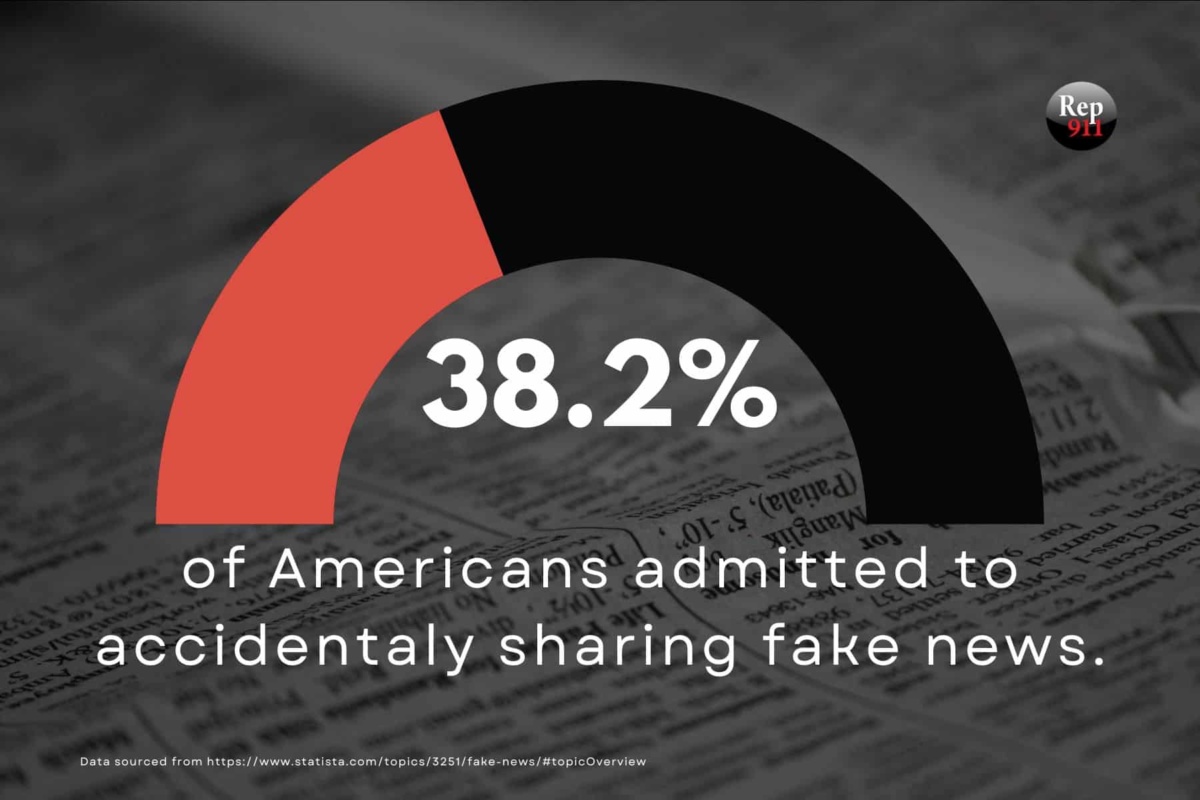

The spread of false news online starts when the content resonates with a person. If it confirms what they already believe, they are unlikely to question the accuracy or validity of the information. Once the content resonates with them, they share it online with their network.

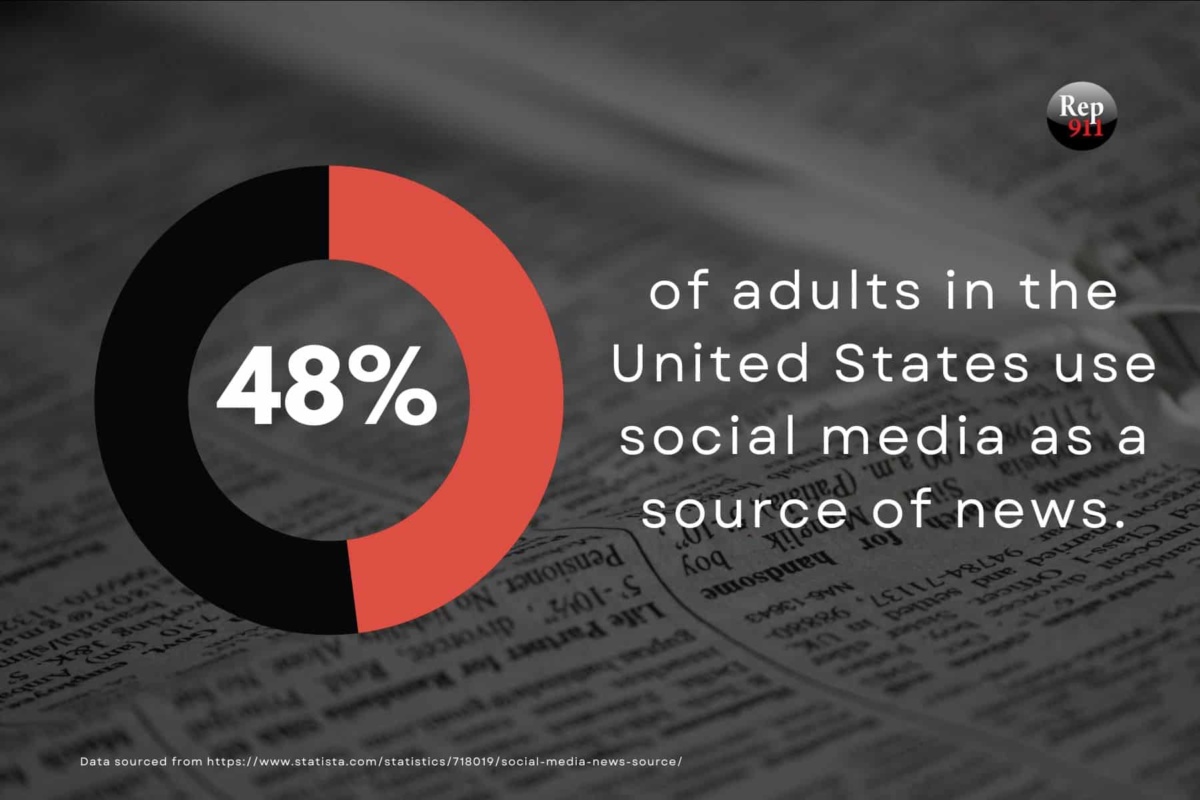

Social media often acts as an echo chamber that confirms our pre-existing beliefs. People tend to build social networks of others who share similar ideas, and they trust information more when it comes from people they know. When one person shares something, their friends and followers are likely to agree with it as well.

What Can We Do about AI and Disinformation?

We all must take steps to address misinformation online. This includes both the companies that publish content and the people who consume it.

Brands Publishing Content Online

Brands have an ethical responsibility to take ownership of the content they publish on their websites, blogs, and social media. They should publish original ideas rather than relying on AI tools. Trying to cut costs by using artificial intelligence instead of human writers will not be worth it in the long term.

Social and Digital Platform Policies

Misinformation most commonly spreads through social media platforms when people share it. That's why it's vital for these platforms to enact policies that stop the spread of misleading or false news.

Platforms such as Meta and Twitter have policies to tackle this growing problem. In many cases, rather than removing false information altogether, these platforms direct users to accurate sources. After that, it is up to users to form their own opinions on the subject.

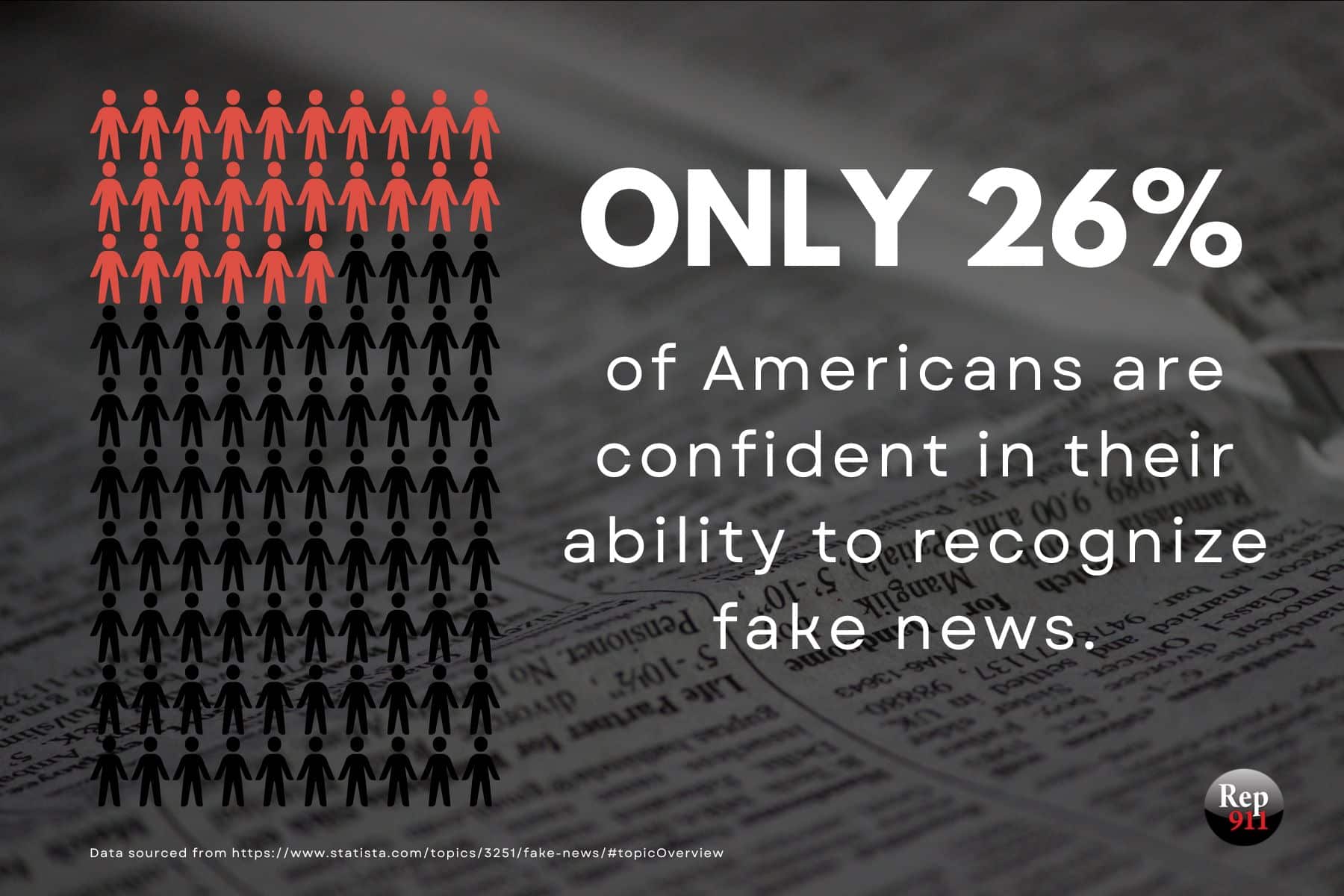

Online Consumers of Information

Individuals must educate themselves about misinformation and take steps to fight it. AI-generated content can be difficult to spot, but these tips can help you detect misinformation:

- Remember that AI-generated content is all over the internet, so you could be reading it without realizing it.

- If a piece of content sounds robotic, AI might have written it.

- Whenever you read an article, fact-check the information using reliable sources. Don’t just read from sources that confirm your biases.

- Don't get all of your news from the same source. In fact, it's smart to read news sources that challenge your usual opinions. This will strengthen your critical thinking skills.

- No piece of content will ever be 100% unbiased. This means you have to think about who created it and why, and how that affects the information you’re reading.

Related: Search engine algorithms are increasingly using artificial intelligence to rank search results. Learn how to optimize (human-written) content with Generative Engine Optimization (GEO).

Political Action

Misinformation is incredibly damaging to the democratic process. When the public can’t discern truth from fiction, they are unable to make informed political decisions. Artificial intelligence tools mean that political misinformation and disinformation can spread much more rapidly than ever before.

Because of this, governments have been taking steps to tackle the spread of misinformation. As members of the public, we all have the power to get politically involved and speak out about this issue. If this is an issue you're passionate about, call your representative and make your voice heard.

Closing: Misinformation and Reputation

Misinformation from AI-generated content can have severe and dangerous consequences for the reputation of a person, brand, or business. Individuals and companies alike must remain vigilant to ensure that such content doesn't damage their reputation.

If you have concerns about your online reputation, our expert team is here to help. At Reputation911, we offer personalized reputation management services for both individuals and businesses. Contact us for a free consultation.